What? Yet Another Data Newsletter?!

Welcome to my first venture into publishing on Substack! Since this is my first attempt at publishing any content (after a very long time), I thought I would start with a brief introduction. I'm Alex, and for the past five years I've worked in the world of alternative data. Data is my passion: I have always loved to roll up my sleeves and dig into new datasets. Having recently stepped away from a full-time role, I'm using this newfound freedom to kick off a newsletter where I'll share insights from some of my personal hands-on projects.

The most recent personal project that has really grabbed my interest involves web-scraped data. I've dedicated considerable time to collecting and analysing this data, exploring its potential to provide investors with deeper insights into the performance of publicly traded companies. What has always excited me about this topic is not so much how we collect data but what we can do with it. In upcoming newsletters, I'll primarily focus on the insights and applications of web data (rather than the technical aspects of web data collection). Additionally, I plan to explore a variety of other topics related to alternative data, sharing my hands-on experience.

For those interested in the technical details of web scraping, I highly recommend following experts in this field, such as Pierluigi Vinciguerra from Data Boutique/Web Scraping Club, who has a very informative newsletter where he regularly discusses detailed technical nuances of web scraping.

One critical aspect I would like to acknowledge early on is the ethics and compliance surrounding web data collection, especially when data is collected for investment research purposes. Compliance is a complex and rapidly evolving field, and anyone planning to engage in this area professionally should seek formal legal advice. From my personal experience, and through my involvement in a number of projects in this space, I've learned some fundamental rules that most industry participants should be aware of and adhere to:

Do not collect data that is not publicly accessible (i.e., any data that requires login)

Avoid collecting any personally identifiable information, such as social network profiles

Do not collect and use any copyrighted material

Do not misrepresent yourself (no use of fake profiles)

Do not disrupt normal website operations

Do not use unethically sourced proxies

Try to steer clear of data that requires agreeing to any form of “clickwrap” agreement; if unavoidable, ensure the terms are reviewed by compliance experts.

This list is not exhaustive or definitive; it represents my understanding of some of the basic principles followed by most reputable players in this field.

What Is Our Goal?

Using web data as a form of alternative data is not a new concept. Industry pioneers like YipitData began with scraped data before expanding into other data sources, and to the best of my knowledge, they continue to use web data in some of their research today. However, I believe we are far from exhausting the potential of web-extracted data.

A good starting point when approaching any dataset is to consider the specific questions it can help us answer. What kinds of questions can web-extracted data help us address? Naturally, this depends on the specific data we're collecting. One of the most common and easily understood applications of web data collection is monitoring pricing and discounts on e-commerce sites. By collecting pricing data, we can analyse trends in discounting. For example, if we observe deeper discounts this year compared to last, we might infer potential impacts on profit margins. However, this approach often leads to hypotheses that are difficult to validate. For instance, without additional context, it is difficult to determine the precise impact of heavy discounting on overall sales and profits.

Investors are often more keen on tracking key performance indicators like revenue and earnings, which can be directly validated against metrics disclosed in quarterly reports. These metrics serve as a 'ground truth' that helps validate the accuracy of our data. In upcoming posts, I'll share examples and methods that demonstrate how web data can provide an early insight into these KPIs.

Going into the Weeds

As this is the first post of our series, I’ll dive right into the details and share specific examples of the data we can source, as well as the transformation steps necessary to make it useful. This post will serve as a baseline reference—if people ask about data origins in the future, I can direct them here.

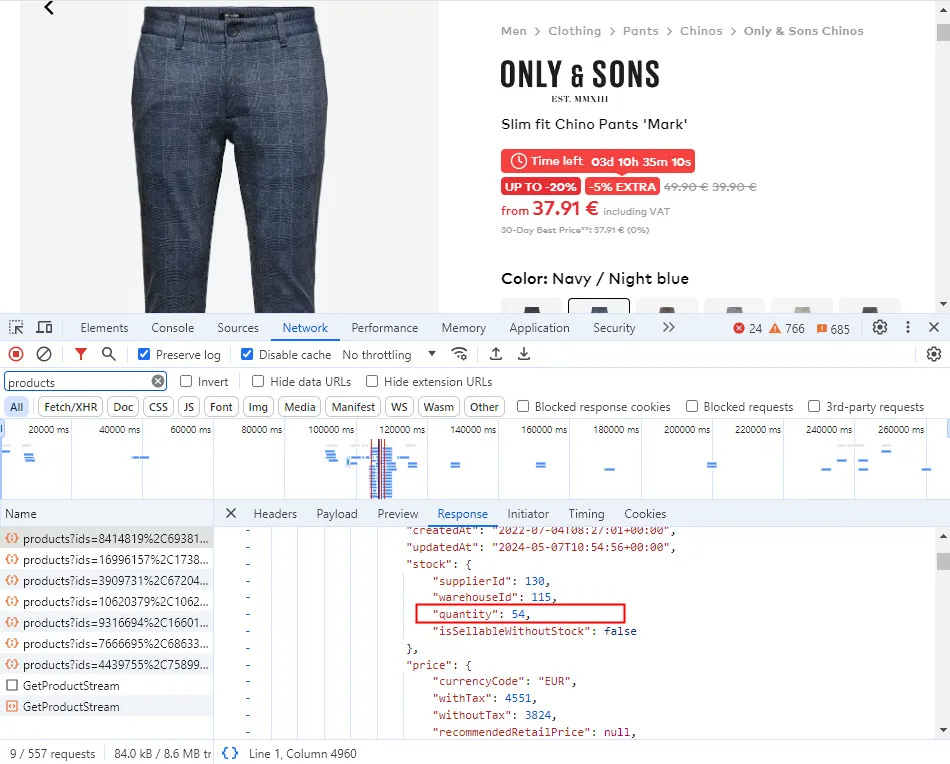

Many e-commerce websites today are quite complex, hosting more data than initially meets the eye. For instance, some websites display inventory levels embedded within their web pages. Intuitively, inventory levels can reveal a lot: by tracking changes over time, we can try to estimate SKU-level sales, which could provide significant insights into a company’s performance. Consider the case of AboutYou (YOU.DE), a German e-commerce retailer. On their product pages, you can typically see the quantity remaining for each product variant, including size and color.

Assuming we have established a method to collect this data, using a scheduled process that runs at regular intervals, we can then accumulate it over time. Once we have sufficient historical data, a quick visual analysis often reveals patterns. For example, the inventory levels for a specific product might fluctuate hourly as shown below:

We observe some obvious patterns in the data: typically, inventory levels decrease, as expected. However, we also notice several sudden negative and positive “jumps”.

To better analyse these trends, let's convert this timeseries into a series of differences by calculating changes from each preceding period. If our goal is to track sales, as the first step we can exclude any positive jumps in inventory levels. While the cause of these increases is uncertain, we know for sure that they do not represent sales.
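As a rough illustration of this differencing step, here is a minimal sketch in plain Python. The function name and the sample readings are hypothetical and not taken from the actual pipeline; the sketch simply treats a drop in inventory as units sold and marks any increase as missing, since an increase cannot represent sales.

```python
from typing import List, Optional


def inventory_to_units_sold(levels: List[int]) -> List[Optional[int]]:
    """Convert consecutive inventory snapshots into per-period units sold.

    A decrease in inventory is counted as sales. An increase (for example
    a restock) is marked as None because it cannot represent sales.
    """
    sold: List[Optional[int]] = []
    for prev, curr in zip(levels, levels[1:]):
        change = curr - prev
        sold.append(-change if change <= 0 else None)
    return sold


# Hypothetical hourly inventory readings for a single SKU.
hourly_levels = [50, 48, 47, 47, 80, 78, 30, 29]
print(inventory_to_units_sold(hourly_levels))
# [2, 1, 0, None, 2, 48, 1]
```

The jump from 47 to 80 is dropped, while the sharp fall from 78 to 30 survives this step as an apparent sale of 48 units and still has to be treated as a possible outlier.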

Next, we address remaining outliers in the data. No method guarantees 100% accuracy in filtering anomalies, but one effective technique that I've found works reasonably well is the Median Absolute Deviation filter. This method involves calculating the median of the data, measuring the absolute deviation of each point from this median, and flagging points whose deviation is large relative to the median of those deviations. For more in-depth information on how this technique works, I recommend checking Crazy Simple Anomaly Detection for Customer Success.
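To make the idea concrete, here is a minimal sketch of a Median Absolute Deviation filter in plain Python. The function name, the threshold of 3.5 and the scaling constant 1.4826 (which makes the MAD comparable to a standard deviation for normally distributed data) are common conventions that I am assuming for illustration; they are not necessarily the settings used for the charts in this post.

```python
from statistics import median
from typing import List, Optional


def mad_filter(
    values: List[Optional[float]], threshold: float = 3.5
) -> List[Optional[float]]:
    """Replace outliers with None using the Median Absolute Deviation."""
    observed = [v for v in values if v is not None]
    if not observed:
        return list(values)

    med = median(observed)
    mad = median([abs(v - med) for v in observed])
    if mad == 0:
        # No spread to measure against, so leave the series untouched.
        return list(values)

    scale = 1.4826 * mad  # assumed scaling constant, see the note above
    return [
        None if v is not None and abs(v - med) / scale > threshold else v
        for v in values
    ]


# Hypothetical units-sold series with one suspicious value.
units_sold = [2, 1, 0, None, 2, 48, 1]
print(mad_filter(units_sold))
# [2, 1, 0, None, 2, None, 1]
```

For simplicity this sketch computes the median over the whole series. A version intended for ongoing use would compute it over a trailing window only.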

Lastly, we must handle missing values, which might result from our prior filtering or from issues during data collection. There are many ways to do this, and usually the simplest techniques work best. As a side note, when we apply these transformations, we need to ensure that we do not “leak” any future data into our calculations. If the plan is to use this data on an ongoing basis, then “the future” will not be known at the time of the analysis. For example, when we deal with missing values, we need to make sure that we only rely on the trailing data that would have been known at any specific point in time.
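One simple way to fill gaps without leaking future data is to use the median of recently observed values. The sketch below is an assumption on my part about what "simple" could look like, with a hypothetical function name and window size; the key property is that each gap is filled only from observations that came before it.

```python
from statistics import median
from typing import List, Optional


def fill_with_trailing_median(
    values: List[Optional[float]], window: int = 24
) -> List[Optional[float]]:
    """Fill missing values using only earlier observations.

    Each gap is replaced by the median of the observed (non-missing)
    values among the previous `window` periods. Nothing after the gap
    is ever consulted, so no future information leaks in.
    """
    filled: List[Optional[float]] = []
    for i, value in enumerate(values):
        if value is None:
            history = values[max(0, i - window):i]
            known = [x for x in history if x is not None]
            value = median(known) if known else None
        filled.append(value)
    return filled


# Hypothetical series after outlier filtering, with two gaps.
series = [2, 1, 0, None, 2, None, 1]
print(fill_with_trailing_median(series, window=3))
# [2, 1, 0, 1, 2, 1.0, 1]
```

A gap at the very start of the series stays missing, because there is no history to draw on at that point in time.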

The cleaned timeseries, post-filtering and handling of missing data, appears as follows:

After applying this transformation across all SKUs and combining the number of units sold with pricing, we can approximate SKU-level sales. It's always beneficial to sanity check our data by looking for expected patterns such as Black Friday sales spikes or other seasonal behaviors typical for the industry. Confirming these patterns adds another layer of validity to our signal.
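The combination of units sold and price can be sketched as follows. The record layout, field names and figures are all hypothetical and only serve to show the arithmetic: units sold multiplied by price for each SKU, summed per day.

```python
from collections import defaultdict
from typing import Dict, List


def approximate_daily_sales(records: List[dict]) -> Dict[str, float]:
    """Sum units_sold * price across all SKUs for each date."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record["date"]] += record["units_sold"] * record["price"]
    return dict(totals)


# Hypothetical cleaned SKU-level records.
records = [
    {"date": "2023-11-24", "sku": "A-M", "units_sold": 3, "price": 20.0},
    {"date": "2023-11-24", "sku": "B-L", "units_sold": 1, "price": 50.0},
    {"date": "2023-11-25", "sku": "A-M", "units_sold": 2, "price": 20.0},
]
print(approximate_daily_sales(records))
# {'2023-11-24': 110.0, '2023-11-25': 40.0}
```

Plotting totals like these over time is an easy way to run the sanity checks mentioned above, such as looking for a Black Friday spike.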

In upcoming posts, I will explore the insights we can derive from this data, using some specific companies as examples.
